
Overview

In blockchain development, real-time data is critical for building responsive, interactive applications. Traditionally, developers have used WebSocket to handle real-time communication, and while WebSocket is effective in many scenarios, it comes with limitations that can make scaling and reliability challenging as your application grows. That’s where Streams come in.

QuickNode's Streams is a purpose-built solution designed to address the challenges of handling real-time and historical blockchain data at scale, while offering additional features to simplify your development.

In this guide, we’ll explore the similarities and differences between WebSocket and Streams, and help you understand how Streams can solve some of the common issues developers face with WebSocket. By the end, you'll be equipped with the knowledge to make an informed decision on which solution is best suited for your application's real-time data needs.

What is WebSocket?

WebSocket is a communication protocol that provides real-time, bidirectional data transfer between a client and a server. Unlike traditional HTTP, where every exchange starts with a new request, WebSocket keeps a single persistent connection open, so data can flow in both directions without the client repeatedly issuing requests.

How WebSocket Works in Blockchain

In a blockchain environment, WebSockets are commonly used for subscribing to real-time events such as new blocks, transactions, or smart contract events. They enable low-latency communication, making them ideal for applications that need to react to blockchain events quickly.

Here's a simplified flow:

- The client establishes a WebSocket connection to a blockchain node.

- The client sends a subscription request for specific events.

- The node sends real-time updates to the client as events occur.

- The client processes the incoming data in real-time.
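The flow above can be sketched at the message level. This is a minimal sketch, assuming an Ethereum-style node that speaks JSON-RPC `eth_subscribe`; the helper names `buildSubscribeRequest` and `handleNodeMessage` are made up for illustration, and the socket itself is left out so the snippet runs without a network connection.

```javascript
// Step 2: the payload a client sends once the socket is open.
function buildSubscribeRequest(id, topic) {
  return JSON.stringify({
    jsonrpc: "2.0",
    id,
    method: "eth_subscribe",
    params: [topic], // e.g. "newHeads" for new block headers
  });
}

// Steps 3-4: route each message the node pushes back.
// `state` tracks the subscription id and collects received headers.
function handleNodeMessage(raw, state) {
  const msg = JSON.parse(raw);
  if (msg.id === state.requestId && typeof msg.result === "string") {
    // Acknowledgement: the node returns a subscription id.
    state.subscriptionId = msg.result;
  } else if (
    msg.method === "eth_subscription" &&
    msg.params.subscription === state.subscriptionId
  ) {
    // Notification: one event for our subscription.
    state.headers.push(msg.params.result);
  }
  return state;
}

// Simulated exchange (no real socket involved).
const state = { requestId: 1, subscriptionId: null, headers: [] };
const request = buildSubscribeRequest(state.requestId, "newHeads");
handleNodeMessage('{"jsonrpc":"2.0","id":1,"result":"0xabc"}', state);
handleNodeMessage(
  '{"jsonrpc":"2.0","method":"eth_subscription","params":{"subscription":"0xabc","result":{"number":"0x10"}}}',
  state
);
console.log(request);
console.log(parseInt(state.headers[0].number, 16)); // block number in decimal
```

In a real client, `buildSubscribeRequest` would be sent over the open socket and `handleNodeMessage` would be wired to the socket's message event.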

What is Streams?

Streams is a blockchain data streaming and ETL (Extract, Transform, Load) service built to make real-time and historical blockchain data easily accessible for developers. It allows you to reliably stream live blockchain data or backfill historical data to your preferred destination, whether it's an API (e.g., Webhook), an S3-compatible storage bucket, or a database (e.g., PostgreSQL, Snowflake).

Streams is designed to simplify the management of blockchain data, whether real-time or historical, allowing developers to focus on their application logic.

Key Features of Streams

- Real-Time Data Streaming: Receive live blockchain data as it happens, with no need to poll JSON-RPC endpoints constantly.

- Historical Data Backfilling: Backfill historical blockchain data using prebuilt templates with clear cost and completion time estimates provided upfront.

- Customizable Data Filters: Only get the data that's important to you by applying custom filters on your Stream. This allows you to use JavaScript code (ECMAScript) to personalize the data from your Stream before delivery, allowing you to set rules or detect patterns such as tracking events, transactions, decoding data, etc.

- Cost-Efficient: Streams are priced based on the volume of data delivered to your destination. Free plans have a minimum billable size of 2.5KB per filtered block, while paid plans charge only for the exact data size received, helping you maintain predictable and scalable costs—even in high-volume applications.

- Multi-Chain Support: Streams work across multiple blockchain networks, simplifying multi-chain development.

- Data Consistency: Built-in reliability mechanisms such as reorg handling keep your data consistent, so you won’t miss blocks or transactions even during chain reorganizations. More info can be found here.

- Compression: Optimize data transmission with compression to minimize bandwidth usage.

- Functions Integration: Combine Streams with Functions to create powerful, serverless APIs or real-time data processing pipelines without managing any infrastructure.

Streams with Filters

Streams with Filters lets you receive only the blockchain data you need, reducing data volume and costs. Using JavaScript filters, you can modify data before it reaches your destination. Filters also include access to Key-Value Store, allowing you to store data from your Stream and access it via REST API.

    function main(stream) {
      const data = stream.data;
      var numberDecimal = parseInt(data[0].number, 16);
      var filteredData = {
        hash: data[0].hash,
        number: numberDecimal
      };
      return filteredData;
    }

This filter ensures that you receive only block numbers and block hashes, rather than the entire block dataset. You can find several other Filters examples (e.g., ERC-20 transfers, tracking a specific address, etc.) here.
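To see what the filter above returns, it can be called locally with a hand-written payload. This is only a sketch: the `samplePayload` object is hypothetical and much smaller than a real block, and it assumes, as the filter does, that `stream.data` is an array of blocks with a hex-encoded `number`.

```javascript
// Same filter as above: keep only the hash and the decimal block number.
function main(stream) {
  const data = stream.data;
  return {
    hash: data[0].hash,
    number: parseInt(data[0].number, 16),
  };
}

// Hypothetical sample payload: one block with a hex-encoded number.
const samplePayload = {
  data: [
    {
      hash: "0xb72704063570e4b5a5f972f380fad5e43e1e8c9a1b0e36f204b9282c89adc677",
      number: "0x10fc8a9", // 17811625 in decimal
      transactions: [], // extra fields like this are dropped by the filter
    },
  ],
};

const filtered = main(samplePayload);
console.log(filtered);
```

Only the `hash` and `number` keys survive, which is what keeps the delivered payload small.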

Streams with Functions

While Streams provide real-time and historical blockchain data, combining them with Functions enables you to process and enhance that data on the fly. Whether you’re creating APIs or performing complex operations on blockchain data, Functions add another layer of flexibility and customization, all without the need to manage servers.

Here’s why Functions are the perfect complement to Streams:

- API Ready: Functions are automatically exposed as APIs, allowing you to easily integrate them with your front-end or other services. You can transform real-time blockchain data into an API that is ready for immediate use.

- Serverless Flexibility: With Functions, you can perform complex operations on blockchain data without worrying about managing servers or scaling. Functions auto-scale with demand, meaning you only pay for what you use.

- External Library Support: Functions give you access to external libraries, both web3 and utility-related, right within your runtime environment. View the complete list of supported libraries here.

- Filter and Transform Data: Functions allow you to filter specific data from Streams, such as transactions or events, and transform encoded blockchain data into a format that fits your application’s needs.

- Enhance Data: Need to enrich your data before routing it to its destination? Functions enable you to make additional API calls or retrieve supplementary data, providing extra context to the blockchain data before passing it on.

- Key-Value Store: Access Key-Value Store, letting you store and retrieve data based on your Functions actions.
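As an illustration of the "Filter and Transform Data" point, the sketch below decodes raw ERC-20 `Transfer` logs into readable objects using plain JavaScript. The `main(params)` entry point and the `params.data` shape are assumptions made for this example, not a confirmed Functions signature; the event topic is the standard `Transfer(address,address,uint256)` signature hash.

```javascript
// keccak256("Transfer(address,address,uint256)"), the ERC-20 Transfer event signature.
const TRANSFER_TOPIC =
  "0xddf252ad1be2c89b69c2b068fc378daa952ba7f163c4a11628f55a4df523b3ef";

// Indexed address topics are 32 bytes; the address is the last 20 bytes.
function topicToAddress(topic) {
  return "0x" + topic.slice(-40);
}

// Decode one raw log into a readable transfer, or null if it is not one.
// ERC-20 transfers carry exactly 3 topics (signature, from, to).
function decodeTransfer(log) {
  if (log.topics.length !== 3 || log.topics[0] !== TRANSFER_TOPIC) return null;
  return {
    token: log.address,
    from: topicToAddress(log.topics[1]),
    to: topicToAddress(log.topics[2]),
    value: BigInt(log.data).toString(), // raw units, before applying token decimals
  };
}

// Assumed entry point: raw logs arrive under `params.data`.
function main(params) {
  return params.data.map(decodeTransfer).filter((t) => t !== null);
}

// Hypothetical log: 1e18 raw units sent from 0xaaaa... to 0xbbbb...
const sampleLog = {
  address: "0x" + "c".repeat(40),
  topics: [
    TRANSFER_TOPIC,
    "0x" + "0".repeat(24) + "a".repeat(40),
    "0x" + "0".repeat(24) + "b".repeat(40),
  ],
  data: "0x" + "de0b6b3a7640000".padStart(64, "0"),
};

const decoded = main({ data: [sampleLog] });
console.log(decoded);
```

The value is kept as a string because token amounts routinely exceed the safe integer range of JavaScript numbers.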

Example Streams and Functions Use Cases

Below are some examples of how Streams and Functions work together. For a detailed Functions library, click here.

- Custom APIs for DeFi or NFT Platforms: By combining Streams and Functions, you can create custom APIs that provide real-time or aggregated data, such as the latest transaction stats for DeFi platforms or live updates for NFT trades.

- Automated Alerts: Set up a Stream to monitor important blockchain events and use a Function to trigger notifications in real-time, routing them to services like PagerDuty, Discord, or Google Sheets. These use cases are well represented in the Functions Library, where you’ll find examples like Stream to Discord or Stream to Google Sheets.

- Data Analysis: Process blockchain data in real-time by analyzing Stream events, storing results in Key-Value Store, and retrieving them later in your Function for additional processing or serving via REST API. This workflow is ideal for tracking and serving metrics and transactions or building custom data-enriched API solutions.
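The automated-alerts idea can be sketched as a small transformation step. The threshold, the transfer shape, and the `buildAlerts` helper are all hypothetical; the `{ content: "..." }` object is the JSON body that Discord webhooks accept for a plain text message, and actually sending it is left out.

```javascript
// Hypothetical threshold: 1 token with 18 decimals, in raw units.
const THRESHOLD = 10n ** 18n;

// Turn decoded transfers into webhook message bodies for the large ones.
function buildAlerts(transfers, threshold = THRESHOLD) {
  return transfers
    .filter((t) => BigInt(t.value) >= threshold)
    .map((t) => ({
      content: `Large transfer of ${t.value} from ${t.from} to ${t.to}`,
    }));
}

const alerts = buildAlerts([
  { from: "0xaaa", to: "0xbbb", value: "2500000000000000000" },
  { from: "0xccc", to: "0xddd", value: "1000" },
]);
console.log(alerts);
// Each entry could then be POSTed as JSON to your notification webhook URL.
```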

How Streams Works

Streams operate on a publish-subscribe model, but with added reliability and features:

- Data Ingestion: Streams continuously captures blockchain data from nodes, including blocks, transactions, logs, and traces.

- Filtering and Processing: Apply custom JavaScript filters to process and transform the data in real-time, allowing you to extract only the information you need.

- Delivery Mechanism: Processed data is pushed to your specified destination (e.g., Webhook, S3-compatible storage bucket, or databases such as PostgreSQL and Snowflake) in near real-time or in configurable batches.

- Reorg Handling: Streams automatically detects and manages chain reorganizations, ensuring data consistency and accuracy.
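On the receiving side, reorg handling is easiest to live with when your own storage is idempotent. The sketch below assumes that, after a reorganization, corrected blocks arrive again for block numbers you have already stored; that delivery behavior is an assumption for illustration, and `applyBlock` is a hypothetical helper rather than part of Streams.

```javascript
// Canonical view of the chain on the consumer side, keyed by block number.
const blocksByNumber = new Map();

// Apply one delivered block and report what happened.
function applyBlock(block) {
  const existing = blocksByNumber.get(block.number);
  if (existing && existing.hash === block.hash) return "duplicate";
  // New number, or same number with a different hash (a reorged block).
  blocksByNumber.set(block.number, block);
  return existing ? "replaced" : "added";
}

const results = [
  applyBlock({ number: 100, hash: "0xaaa" }), // first delivery
  applyBlock({ number: 100, hash: "0xaaa" }), // retry of the same block
  applyBlock({ number: 100, hash: "0xbbb" }), // corrected block after a reorg
];
console.log(results, blocksByNumber.get(100).hash);
```

Keying by block number means a re-delivered block overwrites the stale one instead of creating a second, conflicting record.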

WebSocket vs. Streams

In this section, we'll compare WebSocket and Streams topic by topic, breaking down their key features and use cases and highlighting the most common problems with WebSocket.

Feature Comparison

| Feature | WebSocket | Streams |
|---|---|---|
| Data Delivery Model | Subscription-based model where the client maintains an open connection. | Push-based model where data is sent to a specified destination without maintaining a constant connection. |
| Data Types and Scope | Designed for real-time data. | Supports both real-time and historical data. |
| Historical Data | Requires separate API calls for historical data. | Supports backfilling of historical data alongside real-time data. |
| Reliability and Guarantees | Best-effort delivery. Data can be missed if the connection drops. | Guaranteed delivery with exactly-once semantics, ensuring no data loss even during disconnections. |
| Data Transformation and Filtering | Limited to client-side filtering of received data. | Powerful server-side filtering and transformation, plus enrichment capabilities through Functions. |
| Blockchain Reorganization Handling | No built-in handling for chain reorganizations. | Automated detection and handling of chain reorganizations. |
| Multi-chain Support | Typically requires separate connections for each blockchain. | Requires multiple streams for multiple chains but provides an easy setup workflow with duplication support. |
| Destination Flexibility | Data is received directly by the client application. | Supports multiple destination types including webhooks, S3 buckets, and databases, with additional routing logic via Functions. |
| Bidirectional Communication | Ideal for applications where bidirectional communication is necessary. | Designed for push-based, one-directional data flow to multiple destinations. |
| Compression | No built-in compression. | Streams offer built-in data compression, reducing payload size and costs significantly for some destinations. |
| Pricing | Priced based on the number of open connections or requests. | Priced based on the volume of data processed and delivered. |
| API Ready | No built-in API. | When combined with Functions, automatically exposed as APIs. |
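The Compression row in the table above has a practical consequence for webhook receivers: a compressed body has to be decompressed before it is parsed. The sketch below uses Node's built-in `zlib` and assumes gzip encoding signalled through a `Content-Encoding` style value; both the encoding and the `decodeBody` helper are assumptions for illustration.

```javascript
const zlib = require("zlib");

// Decode a webhook body, decompressing it first when it is gzip-encoded.
function decodeBody(buffer, contentEncoding) {
  const raw = contentEncoding === "gzip" ? zlib.gunzipSync(buffer) : buffer;
  return JSON.parse(raw.toString("utf8"));
}

// Hypothetical batch of 50 small block summaries.
const payload = {
  data: Array.from({ length: 50 }, (_, i) => ({
    number: i,
    hash: "0x" + "0".repeat(64),
  })),
};

const plain = Buffer.from(JSON.stringify(payload));
const compressed = zlib.gzipSync(plain);

console.log("plain bytes:", plain.length, "gzip bytes:", compressed.length);
console.log(decodeBody(compressed, "gzip").data.length); // same 50 entries
```

Blockchain payloads tend to be repetitive JSON, which is why compression can shrink them noticeably.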

Performance Considerations

| Performance Consideration | WebSocket | Streams |
|---|---|---|
| Latency | Generally offers lower latency for small-scale, simple use cases. | Optimized for high-volume data scenarios, easily handling thousands of messages per second. |
| Scalability and Throughput | Limited by the number of open connections. | High throughput (3,000+ messages per second). |
| Resource Utilization | Requires the client to maintain open connections and process all incoming data. | Offloads much of the work to QuickNode's infrastructure, reducing client-side resource consumption. |

Use Case Scenarios

| Sample Use Cases | WebSocket | Streams |
|---|---|---|
| Interactive applications | Ideal for interactive apps that rely on two-way communication, such as gaming or collaborative tools. | Focuses on one-way data streams. |
| Data analytics apps | Not applicable since WebSocket doesn't support historical data. | Ideal for data analytics apps that require both real-time and historical data. |
| Live alerts | Works well for live alert systems. | Works well for live alert systems. |
| Explorers | Not applicable due to lack of historical data and no built-in reorg handling. | Perfect for explorers, as Streams support historical data and can handle blockchain reorgs. |
| Custom APIs | Complex logic and real-time updates are difficult to implement. | Build custom APIs that aggregate, filter, and enhance data from Streams using Functions. |

Most Common Problems with WebSocket

Although WebSocket is a powerful tool for real-time communication, it comes with several challenges:

- Handling Disconnections

WebSocket connections can drop unexpectedly due to network issues or server failures. Reconnecting and ensuring no data is lost during downtime requires manual implementation, such as reconnection logic and queueing missed events. Streams offer built-in reliability with configurable timeout settings (e.g., 30-second thresholds), customizable retry attempts, and pause mechanisms after a specified number of retries.

If you need to deal with this kind of disconnection, check the Handling Websocket Drops and Disconnections article.
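The manual reconnection logic mentioned above usually takes the form of retries with exponential backoff. The following is a minimal sketch: `connect` stands for any async function that opens your WebSocket connection, and the helper names and default timings are illustrative choices rather than recommended values.

```javascript
// Delay before the next retry: doubles each attempt, capped at maxMs.
function backoffDelay(attempt, baseMs = 500, maxMs = 30000) {
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

function defaultSleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Keep calling `connect` until it succeeds or the attempts run out.
// `sleep` is injectable so the wait can be skipped in tests.
async function connectWithRetry(connect, maxAttempts = 5, sleep = defaultSleep) {
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    try {
      return await connect();
    } catch (err) {
      if (attempt === maxAttempts - 1) throw err; // give up after the last try
      await sleep(backoffDelay(attempt));
    }
  }
}

console.log([0, 1, 2, 3].map((n) => backoffDelay(n))); // delays in milliseconds
```

Reconnecting only restores the connection. Events emitted while the client was offline still have to be fetched separately.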

- Bandwidth and Data Management

Because WebSocket subscriptions often deliver more data than needed, they can lead to excessive bandwidth usage. Developers must filter this data client-side, which can be inefficient, especially for high-volume applications. Streams offer server-side filters, so only the data you need is delivered.

- Synchronizing Real-Time with Historical Data

WebSocket typically provides only real-time data. If you need to sync real-time data with historical data (e.g., in a blockchain explorer), you have to manage separate pipelines for each, which adds complexity to your architecture. Streams offer both historical and real-time data.
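To make the synchronization problem concrete, here is a sketch of the gap detection a WebSocket client typically needs. When a new block number arrives, any numbers skipped since the last processed block have to be backfilled through a separate historical call. The `missingBlocks` and `onNewBlock` helpers are hypothetical, and `backfill` is a stand-in for whatever historical API you use.

```javascript
// Block numbers missed between the last processed block and a new one.
function missingBlocks(lastProcessed, incoming) {
  const missing = [];
  for (let n = lastProcessed + 1; n < incoming; n++) missing.push(n);
  return missing;
}

// Process a live block, backfilling any gap first so order is preserved.
function onNewBlock(state, incoming, backfill) {
  const gap = missingBlocks(state.lastProcessed, incoming);
  gap.forEach((n) => state.processed.push(backfill(n)));
  state.processed.push(incoming);
  state.lastProcessed = incoming;
  return gap;
}

const syncState = { lastProcessed: 100, processed: [] };
onNewBlock(syncState, 101, (n) => n); // no gap
const gap = onNewBlock(syncState, 105, (n) => n); // 102-104 were missed
console.log(gap, syncState.processed);
```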

Now that we've discussed some of the common challenges developers face when using WebSocket, let’s quickly look at how to implement both WebSocket and Streams.

Implementation Examples

The code samples below are intended to give you a quick overview of how each setup works. Please note that these are not complete, production-ready solutions but rather starting points to help you get up and running with WebSocket and Streams.

Be sure to replace placeholders like YOUR-QUICKNODE-WSS-URL and YOUR-QUICKNODE-API-KEY with your own values.

Use WebSocket with Ethers.js

This example demonstrates how to set up a simple WebSocket connection using ethers.js to listen for new blocks on the Ethereum network. You'll need your QuickNode WebSocket endpoint, which you can get from the QuickNode dashboard.

    const { WebSocketProvider } = require('ethers')

    const wsProvider = new WebSocketProvider('YOUR-QUICKNODE-WSS-URL')

    wsProvider.on('block', blockNumber => {
      console.log('New block:', blockNumber)
    })

    // Handle disconnections
    wsProvider.websocket.on('close', () => {
      console.log('WebSocket disconnected')
      // Implement reconnection logic here
    })

This basic example shows how to listen for new blocks in real time. If the WebSocket connection drops, you'll need to implement custom reconnection logic, as WebSocket does not automatically handle this for you.

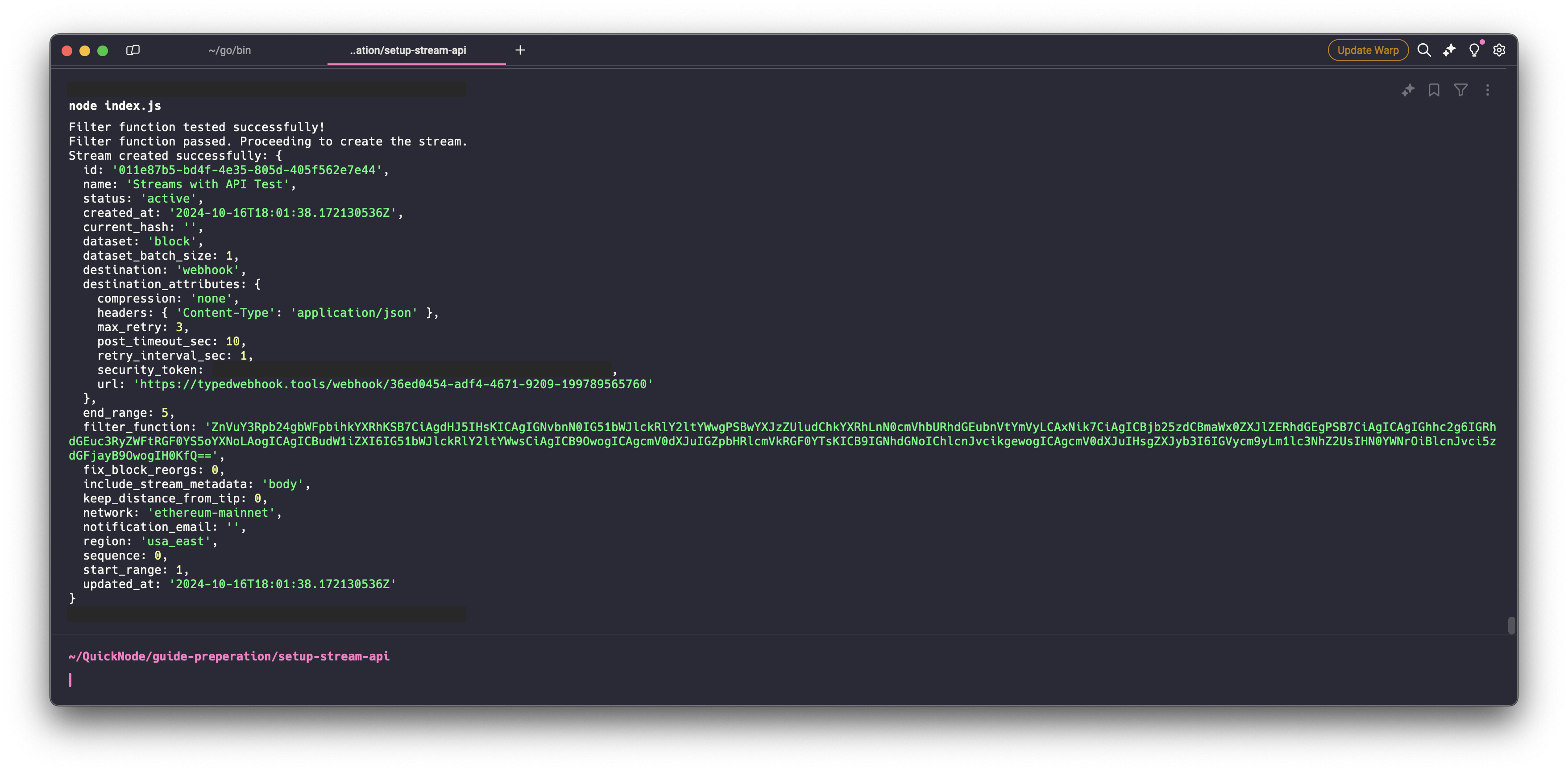

Create a Stream using API

In this example, we’ll set up a Stream using the QuickNode Streams API. This Stream listens to Ethereum mainnet and sends data to a specified webhook. You’ll need to configure your API key, webhook URL, and the block range for streaming.

In the code below, we first define a main() function, which is our filtering function that processes data and converts block numbers from hexadecimal to decimal format. The core of the logic revolves around creating a Stream via QuickNode's API, but before creating the Stream, we perform a precheck using the testFilterFunction().

The testFilterFunction() sends a base64-encoded version of the filter function (in this case, the main() function) to the QuickNode API to validate its behavior. It checks the filter against sample block data to ensure it works as expected.

If the test passes, the setupQuickNodeStream() function proceeds to create a new Stream with the validated filter. If the test fails, the Stream is not created, and an error is logged.

    const axios = require("axios");

    const QUICKNODE_API_KEY = "YOUR-QUICKNODE-API-KEY"; // Replace with your actual API key
    const WEBHOOK_URL = "YOUR-WEBHOOK-URL"; // Replace with your ngrok URL

    // Filter function: keeps only the block hash and the decimal block number
    function main(stream) {
      try {
        const data = stream.data;
        const numberDecimal = parseInt(data[0].number, 16);
        const filteredData = {
          hash: data[0].hash,
          number: numberDecimal,
        };
        return filteredData;
      } catch (error) {
        return { error: error.message, stack: error.stack };
      }
    }

    // Runs the filter against a known block and checks the result
    async function testFilterFunction(base64FilterFunction) {
      const hash =
        "0xb72704063570e4b5a5f972f380fad5e43e1e8c9a1b0e36f204b9282c89adc677"; // Hash of the test block
      const number = "17811625"; // Number of the test block

      let data = JSON.stringify({
        network: "ethereum-mainnet",
        dataset: "block",
        filter_function: base64FilterFunction,
        block: number,
      });

      try {
        const response = await axios.post(
          "https://api.quicknode.com/streams/rest/v1/streams/test_filter",
          data,
          {
            headers: {
              accept: "application/json",
              "Content-Type": "application/json",
              "x-api-key": QUICKNODE_API_KEY,
            },
          }
        );

        // Loose equality on purpose: the filter returns a number, `number` is a string
        if (
          response.status === 201 &&
          response.data.hash == hash &&
          response.data.number == number
        ) {
          console.log("Filter function tested successfully!");
          return true;
        } else {
          console.error("Error testing filter function:", response.status);
          return false;
        }
      } catch (error) {
        console.error(
          "Error testing filter function:",
          error.response ? error.response.data : error.message
        );
        throw error;
      }
    }

    // Validates the filter, then creates a Stream for the given block range
    async function setupQuickNodeStream(startBlock, endBlock) {
      const filterFunctionString = main.toString();
      const base64FilterFunction =
        Buffer.from(filterFunctionString).toString("base64");

      // Test the filter function first
      const testResult = await testFilterFunction(base64FilterFunction);
      if (!testResult) {
        console.error("Filter function failed. Stream not created.");
        return;
      }

      console.log("Filter function passed. Proceeding to create the stream.");

      const streamConfig = {
        name: "Streams with API Test",
        network: "ethereum-mainnet",
        dataset: "block",
        filter_function: base64FilterFunction,
        region: "usa_east",
        start_range: startBlock,
        end_range: endBlock,
        dataset_batch_size: 1,
        include_stream_metadata: "header",
        destination: "webhook",
        fix_block_reorgs: 0,
        keep_distance_from_tip: 0,
        destination_attributes: {
          url: WEBHOOK_URL,
          compression: "none",
          headers: {
            "Content-Type": "application/json",
          },
          max_retry: 3,
          retry_interval_sec: 1,
          post_timeout_sec: 10,
        },
        status: "active",
      };

      try {
        const response = await axios.post(
          "https://api.quicknode.com/streams/rest/v1/streams",
          streamConfig,
          {
            headers: {
              accept: "application/json",
              "Content-Type": "application/json",
              "x-api-key": QUICKNODE_API_KEY,
            },
          }
        );

        console.log("Stream created successfully:", response.data);
        return response.data.id;
      } catch (error) {
        console.error(
          "Error creating stream:",
          error.response ? error.response.data : error.message
        );
        throw error;
      }
    }

    // Stream blocks 1 through 5; errors are already logged above
    setupQuickNodeStream(1, 5)
      .then((res) => console.log(res))
      .catch(() => {
        process.exitCode = 1;
      });

This example demonstrates how to create a Stream programmatically. You can customize it further by adjusting the filters and destination settings.
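On the destination side, the webhook needs something listening for deliveries. The sketch below uses Node's built-in `http` module and assumes each POST body is the JSON object returned by the filter above (`{ hash, number }`); the exact delivery format is an assumption here, and `parseDelivery` and `createReceiver` are hypothetical helper names.

```javascript
const http = require("http");

// Parse one delivery body. Assumes the filter output shape { hash, number }.
function parseDelivery(rawBody) {
  const body = JSON.parse(rawBody);
  return { hash: body.hash, number: Number(body.number) };
}

// Minimal receiver: read the POST body, parse it, and acknowledge it.
function createReceiver(onBlock) {
  return http.createServer((req, res) => {
    if (req.method !== "POST") {
      res.writeHead(405);
      res.end();
      return;
    }
    let raw = "";
    req.on("data", (chunk) => (raw += chunk));
    req.on("end", () => {
      try {
        onBlock(parseDelivery(raw));
        res.writeHead(200);
        res.end("ok");
      } catch (err) {
        // Malformed JSON: reject so the problem is visible to the sender.
        res.writeHead(400);
        res.end("invalid payload");
      }
    });
  });
}

// To run locally: createReceiver(console.log).listen(3000);
console.log(parseDelivery('{"hash":"0xabc","number":17811625}'));
```

Exposing the local port through a tunnel such as ngrok gives you the public URL to use as the webhook destination.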

Create a Stream on the Frontend

You can also create and manage Streams directly from the QuickNode dashboard without needing to write code. Here’s how to set up a Stream via the dashboard:

- Go to your QuickNode dashboard.

- Click Create Stream or select one of the available Stream templates.

- Configure the network, dataset, and filters for your Stream.

- Set the destination for the data, such as a webhook or S3 bucket.

This method allows you to quickly deploy a Stream without any code.

Conclusion

While WebSocket may be suitable for real-time blockchain data in some cases, it often requires high effort to maintain, especially when dealing with reconnection logic and handling disconnections. On the other hand, Streams provide a more robust, scalable, and feature-rich alternative, particularly for complex or high-volume applications.

By understanding the strengths and limitations of each, you can make an informed decision on which solution is best for your project. Whether you’re building with WebSocket for simplicity or Streams for reliability and scalability, QuickNode has the tools and infrastructure to support all your needs.

If you have questions, any ideas or suggestions, please contact us directly. Also, stay up to date with the latest by following us on Twitter and joining our Discord and Telegram announcement channel.

Further Resources

- Documentation: QuickNode Streams

- Technical Guide: How to Build a Blockchain Indexer with Streams

- Technical Guide: How to Backfill Ethereum ERC-20 Token Transfer Data

- Technical Guide: How to Create Solana WebSocket Subscriptions

- Technical Guide: How to Manage WebSocket Connections With Your Ethereum Node Endpoint

- Other Streams-related guides